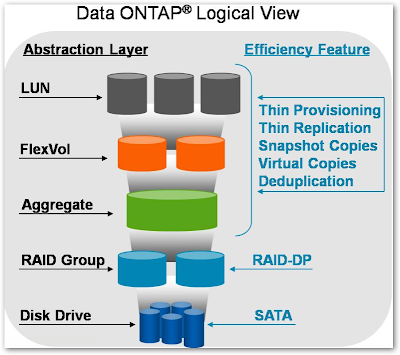

A NetApp aggregate is a DataONTAP feature that combines one or more RAID groups (RGs) into a pool of disk space from which you can create multiple volumes of different types. Newly added disks are assigned to the spare pool; when you create a new aggregate or grow an existing one that needs more space, its disks are pulled from that spare pool.

Unlike storage arrays where you create a LUN from a specific RG, DataONTAP creates volumes from the available space in the aggregate, across multiple RGs. DataONTAP dynamically stripes the volumes across the RAID groups within the aggregate. When disks are added to the aggregate, they go either into an existing RG that is not yet full or into a new RG if all the existing groups are full. By default, RGs are created as RAID-DP (dual parity) with 14 data + 2 parity disks for FC/SAS and 12 data + 2 parity disks for SATA. If you want to keep "vol0" in its own aggregate, that aggregate is usually aggr0, which needs a minimum of 3 disks for RAID-DP.
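To make the RAID-DP numbers concrete, here is a minimal Python sketch of the data-versus-parity split. It is not a NetApp tool: the function name `raid_dp_layout` is made up for illustration, and it assumes only what is stated above, namely that each RAID-DP group gives up two disks to parity and needs at least three disks.

```python
PARITY_DISKS_PER_GROUP = 2   # RAID-DP: two parity disks in every RAID group
MIN_GROUP_SIZE = 3           # smallest RAID-DP group: 1 data + 2 parity


def raid_dp_layout(group_sizes):
    """Return (data_disks, parity_disks) for a list of RAID group sizes.

    Hypothetical helper for illustration only; it does simple arithmetic
    and does not talk to a filer.
    """
    data = 0
    parity = 0
    for size in group_sizes:
        if size < MIN_GROUP_SIZE:
            raise ValueError(f"RAID-DP group needs at least {MIN_GROUP_SIZE} disks, got {size}")
        data += size - PARITY_DISKS_PER_GROUP
        parity += PARITY_DISKS_PER_GROUP
    return data, parity


# A full default FC/SAS group (14 + 2) and a minimal aggr0 (1 + 2).
print(raid_dp_layout([16]))
print(raid_dp_layout([3]))
```

The takeaway is that small RAID groups are expensive: a 3-disk aggr0 spends two of its three disks on parity.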

Aggregates have snapshots, a snap reserve and a snap schedule, just like volumes. An aggregate may be either mirrored or unmirrored. A plex is a physical copy of the WAFL storage within the aggregate, and a plex may be online or offline. A mirrored aggregate consists of two plexes; an unmirrored aggregate contains a single plex. To create a mirrored aggregate, you must have a filer configuration that supports RAID-level mirroring. When mirroring is enabled on the filer, the spare disks are divided into two disk pools. When an aggregate is created, all of the disks in a single plex must come from the same disk pool, and the two plexes of a mirrored aggregate must consist of disks from separate pools, as this maximizes fault isolation.
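The two pool rules above can be written as a small check. This is only a Python sketch of the rule as described here, not something DataONTAP exposes: the function `plex_pool_problems`, the plex names and the pool numbers 0 and 1 are all assumptions chosen for the example.

```python
def plex_pool_problems(plexes):
    """Check a proposed aggregate layout against the disk pool rules.

    `plexes` maps a plex name to the list of pool numbers its disks come
    from (hypothetical representation). Returns a list of problem strings;
    an empty list means the layout satisfies both rules.
    """
    problems = []
    pool_of_plex = {}
    for name, disk_pools in plexes.items():
        distinct = set(disk_pools)
        if len(distinct) != 1:
            # Rule 1: every disk in a plex must come from the same pool.
            problems.append(f"{name}: disks must all come from exactly one pool")
        else:
            pool_of_plex[name] = next(iter(distinct))
    if len(plexes) == 2 and len(pool_of_plex) == 2:
        first, second = pool_of_plex.values()
        if first == second:
            # Rule 2: the two plexes of a mirror must use separate pools.
            problems.append("mirrored aggregate: both plexes use the same pool")
    return problems


# Valid mirror: plex0 entirely from pool 0, plex1 entirely from pool 1.
print(plex_pool_problems({"plex0": [0, 0, 0], "plex1": [1, 1, 1]}))
# Invalid mirror: both plexes drawn from pool 0.
print(plex_pool_problems({"plex0": [0, 0, 0], "plex1": [0, 0, 0]}))
```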

An aggregate may be online, restricted, or offline. When an aggregate is offline, no read or write access is allowed. When an aggregate is restricted, certain operations are allowed (such as aggregate copy, parity recomputation or RAID reconstruction) but data access is not allowed.

Creating an aggregate

An aggregate has a maximum size of 16TB of raw disk with 32-bit DataONTAP. By default, the filer fills up one RAID group with disks before starting another RAID group. Suppose an aggregate currently has one RAID group of 12 disks and its RAID group size is 14. If you add 5 disks to this aggregate, it will have one RAID group with 14 disks and another RAID group with 3 disks. The filer does not evenly distribute disks among RAID groups.
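The fill-before-starting-a-new-group behavior can be sketched in a few lines of Python. This is a simplified model of the rule described above, not DataONTAP's actual placement code, and the function name `add_disks` is hypothetical.

```python
def add_disks(raid_groups, new_disks, raid_group_size):
    """Return the RAID group sizes after adding `new_disks` disks.

    `raid_groups` is a list of current group sizes. Existing groups are
    topped up in order before any new group is started, so disks are not
    spread evenly. Illustrative model only.
    """
    if new_disks < 0 or raid_group_size < 1:
        raise ValueError("new_disks must be >= 0 and raid_group_size >= 1")
    groups = list(raid_groups)
    remaining = new_disks
    # First fill any existing group that still has room.
    for i, size in enumerate(groups):
        if remaining == 0:
            break
        free = max(raid_group_size - size, 0)
        take = min(free, remaining)
        groups[i] += take
        remaining -= take
    # Then start new groups, filling each one before starting the next.
    while remaining > 0:
        take = min(raid_group_size, remaining)
        groups.append(take)
        remaining -= take
    return groups


# The example from the text: one group of 12, group size 14, add 5 disks.
print(add_disks([12], 5, 14))  # [14, 3]
```

Running the example gives one full group of 14 and a second group of only 3 disks. With RAID-DP, two of those three disks hold parity, which is why it can pay to add disks in batches that fill a RAID group.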

To create an aggregate called aggr1 with 4 disks (0a.10, 0a.11, 0a.12 and 0a.13) from a loop on Fibre Channel port 0a, use the following command:

netapp> aggr create aggr1 -d 0a.10 0a.11 0a.12 0a.13

Expanding an aggregate

To expand the aggregate aggr1 with 4 new disks that are not already in use (here 0a.14 through 0a.17), use the following command:

netapp> aggr add aggr1 -d 0a.14 0a.15 0a.16 0a.17

Destroying an aggregate

Aggregates can be destroyed much like volumes, but the aggregate must first be taken offline. There are restrictions if volumes are bound to the aggregate: trying to destroy an aggregate that still contains volumes will throw an error, although this can be overridden with the -f flag. To avoid any potential data loss, it is recommended to go through each volume and destroy it individually rather than relying on the -f option.

To destroy the aggregate aggr1, use the following commands:

netapp> aggr offline aggr1

netapp> aggr destroy aggr1